How to make an iOS face-tracking app with AR

As an AR beginner, I'm getting started with augmented reality in Swift. Below, I'll walk through the steps I took to build my first AR app.

Let's dig in ...

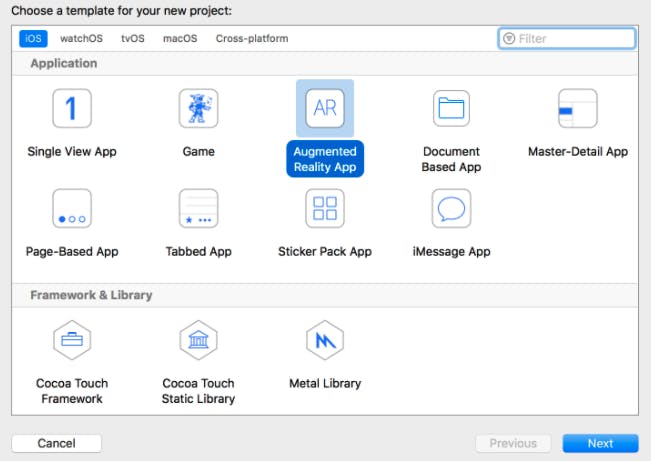

Step 1: Open Xcode, create a new project from the Augmented Reality App template, and choose SceneKit as the content technology -

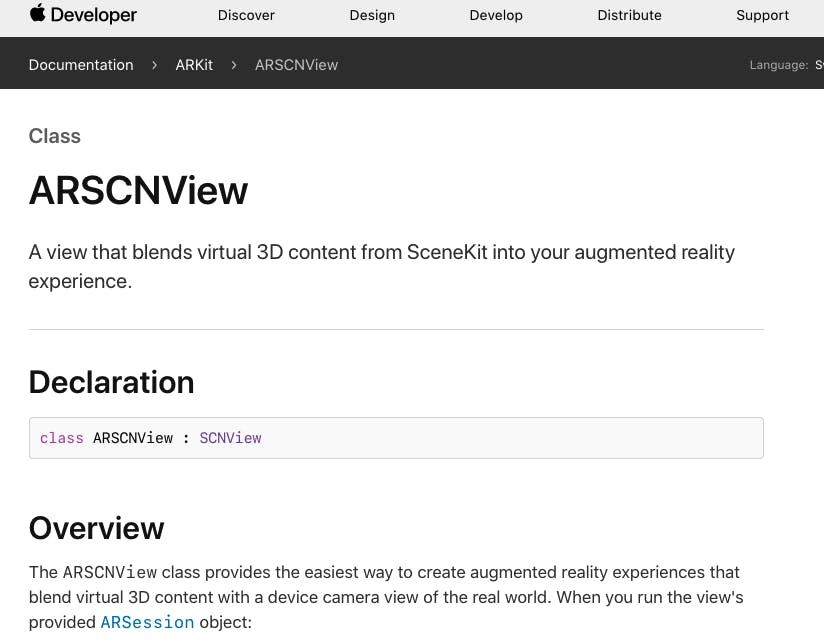

Step 2: A short introduction to ARSCNView -

Main.storyboard contains a single view controller whose main view is an ARSCNView. ARSCNView is a SceneKit view that displays scenes blending the live feed from the device’s camera with virtual 3D content.

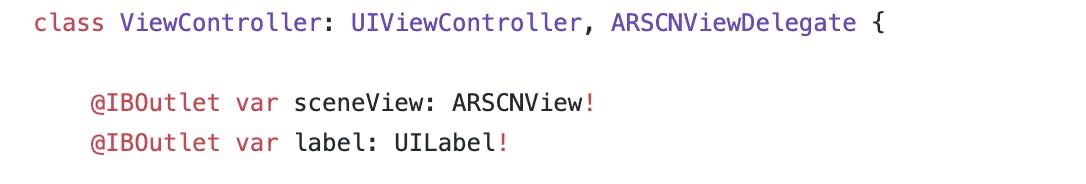

Step 3: Add a label that displays the detected action -

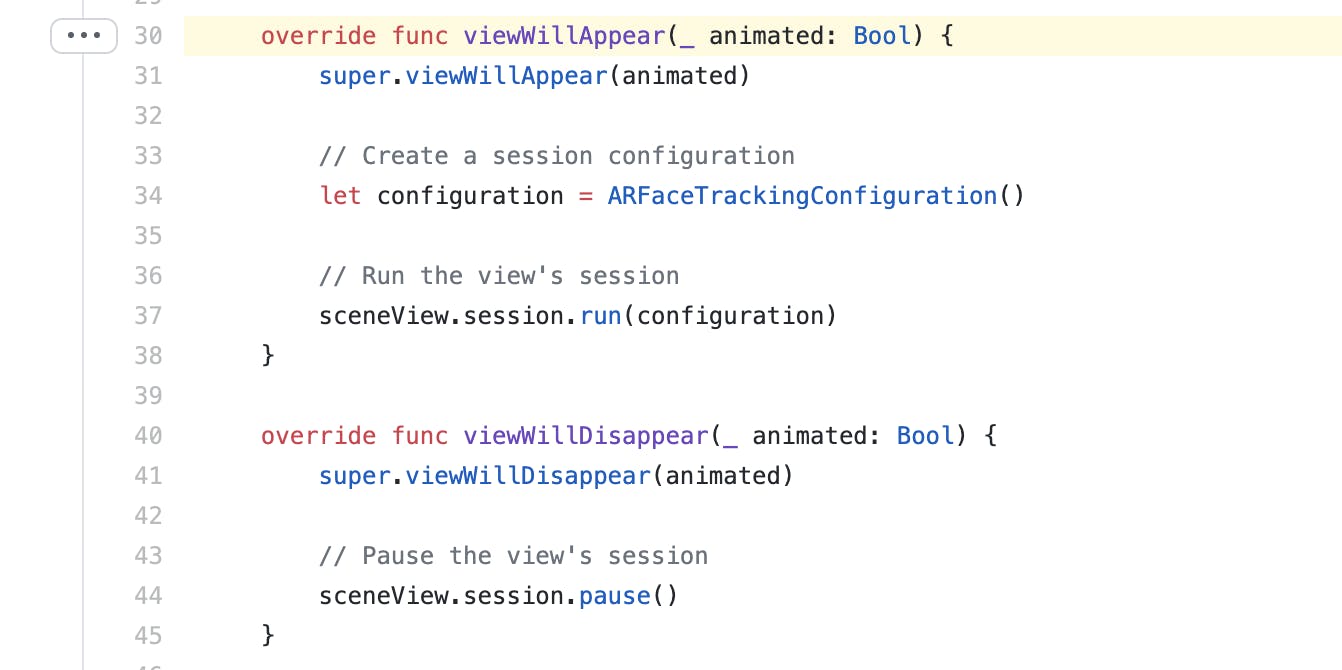
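After Steps 2 and 3, the view controller has two outlets. Here is a minimal sketch of how ViewController.swift might look at this point. It assumes the label is connected as an outlet named `label` and that the detected text is kept in a `String` property named `action`, matching the names used in Step 6. It also assumes the template's sample ship scene has been removed, since face tracking doesn't need it.

```swift
import UIKit
import ARKit

class ViewController: UIViewController, ARSCNViewDelegate {

    // The ARSCNView that the Augmented Reality App template places in Main.storyboard.
    @IBOutlet var sceneView: ARSCNView!

    // The label added in Step 3, connected by Control-dragging from the storyboard.
    @IBOutlet weak var label: UILabel!

    // Text describing the most recently detected expression (shown in `label`).
    var action = ""

    override func viewDidLoad() {
        super.viewDidLoad()
        // Route ARKit/SceneKit rendering callbacks (Steps 5 and 6) to this view controller.
        sceneView.delegate = self
        // Start with an empty label until the first face update arrives.
        label.text = ""
    }
}
```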

Step 4: Code in viewWillAppear(_:) -

ARFaceTrackingConfiguration() is a configuration that tracks facial movement and expressions using the front-facing camera.

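sceneView.session.run(configuration) starts the AR session with that configuration, so ARKit begins tracking your face through the front-facing camera.

sceneView.session.pause() pauses the session, which stops camera capture and tracking. In the template it sits in viewWillDisappear(_:), so tracking stops when the view goes off screen.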
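The session lifecycle described above might look like the following sketch. The class name `FaceSessionViewController` is hypothetical so the snippet stands alone; in the tutorial project these two overrides live in `ViewController`. The `isSupported` guard is an added assumption, not something the steps above require. It prevents running face tracking on hardware that can't do it.

```swift
import UIKit
import ARKit

// Hypothetical stand-alone controller showing only the Step 4 session lifecycle.
class FaceSessionViewController: UIViewController {

    @IBOutlet var sceneView: ARSCNView!

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // Face tracking needs supported front-camera hardware; bail out early otherwise.
        guard ARFaceTrackingConfiguration.isSupported else {
            print("Face tracking is not supported on this device.")
            return
        }
        let configuration = ARFaceTrackingConfiguration()
        // Start the session: ARKit now tracks the face and delivers ARFaceAnchor updates.
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera capture and tracking while the view is off screen.
        sceneView.session.pause()
    }
}
```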

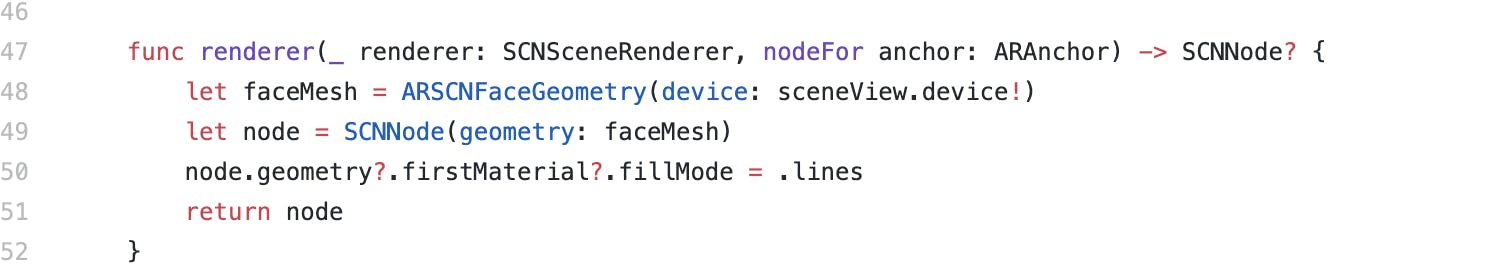

Step 5: Code in renderer(_:nodeFor:) -

Create a face geometry, faceMesh, for rendering with the view's Metal device (device: sceneView.device!).

Then return a new SceneKit node containing that geometry. ARKit adds the node to the scene and keeps it aligned with its corresponding AR anchor.
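Here is a sketch of this delegate method, written as a hypothetical stand-alone delegate class (`FaceMeshRenderer`) so the snippet is self-contained. It reads the Metal device from the `renderer` parameter instead of force-unwrapping `sceneView.device!`. This is an assumption that should give the same device, because the renderer passed in is the scene view. The wireframe look (`fillMode = .lines`) is a styling choice, not something the step specifies.

```swift
import ARKit
import SceneKit

// Hypothetical delegate containing only the Step 5 callback.
final class FaceMeshRenderer: NSObject, ARSCNViewDelegate {

    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        // Only face anchors get a mesh; other anchor types are skipped.
        guard anchor is ARFaceAnchor,
              let device = renderer.device,
              // ARSCNFaceGeometry's initializer is failable, so unwrap it safely.
              let faceMesh = ARSCNFaceGeometry(device: device) else {
            return nil
        }
        // Draw the mesh as a wireframe so the real face stays visible underneath.
        faceMesh.firstMaterial?.fillMode = .lines
        // ARKit adds this node to the scene and keeps it aligned with the face anchor.
        return SCNNode(geometry: faceMesh)
    }
}
```

If you use a separate delegate object like this, keep a strong reference to it (for example, as a property of the view controller), because ARSCNView holds its delegate weakly.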

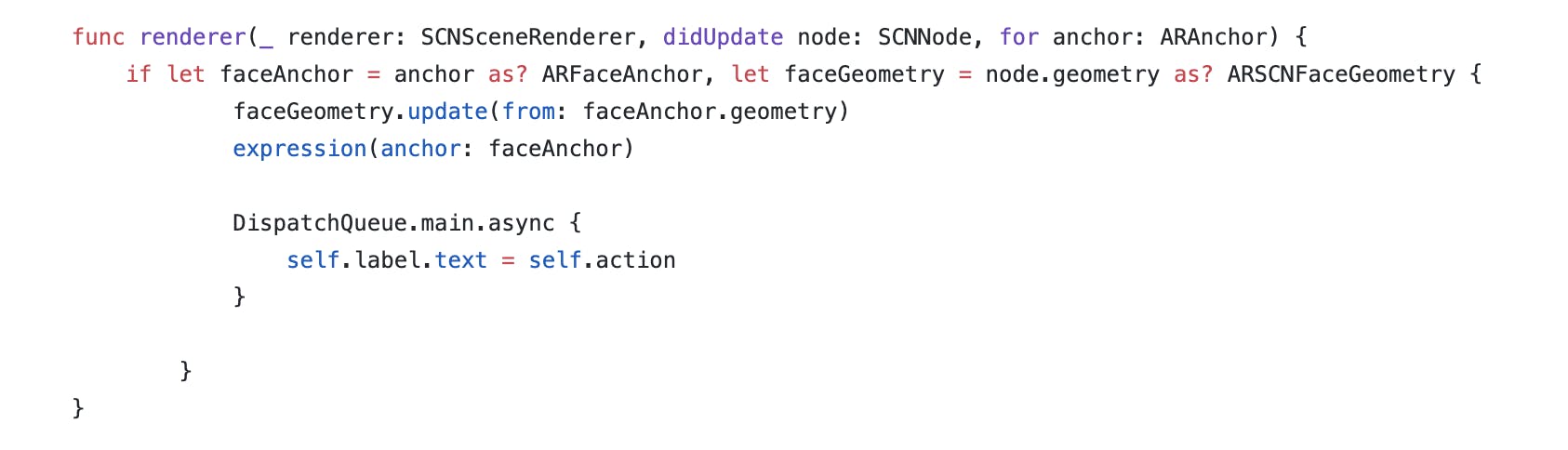

Step 6: Handle content updates in renderer(_:didUpdate:for:) -

ARSCNView calls this delegate method after a SceneKit node's properties have been updated to match the current state of its corresponding anchor. Here we call expression(anchor: faceAnchor) to analyze the current expression, then show the result with self.label.text = self.action, so the label text changes as your expression changes.
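Here is a hedged sketch of the update callback. Two details here are assumptions, not read from the text above: the mesh is refreshed with `ARSCNFaceGeometry.update(from:)`, and the label is updated on the main queue. The main-queue hop is needed because these delegate callbacks don't arrive on the main thread, and UIKit must only be touched there. `FaceUpdateHandler` and the single jaw-open check are hypothetical placeholders for the tutorial's `ViewController` and its `expression(anchor:)` function.

```swift
import UIKit
import ARKit
import SceneKit

// Hypothetical stand-alone delegate; in the tutorial this method lives in ViewController.
final class FaceUpdateHandler: NSObject, ARSCNViewDelegate {

    // The Step 3 label; weak because the view hierarchy owns it.
    weak var label: UILabel?

    // Latest description of the user's expression.
    var action = ""

    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        // Ignore anything that is not a face, or a node without the Step 5 face mesh.
        guard let faceAnchor = anchor as? ARFaceAnchor,
              let faceMesh = node.geometry as? ARSCNFaceGeometry else { return }

        // Reshape the mesh so it follows the face's current expression.
        faceMesh.update(from: faceAnchor.geometry)

        // Placeholder analysis with a hypothetical threshold; Step 7 covers the real check.
        let jawOpen = faceAnchor.blendShapes[.jawOpen]?.floatValue ?? 0
        action = jawOpen > 0.5 ? "Mouth open" : "Mouth closed"

        // Copy the text, then update UIKit on the main thread.
        let text = action
        DispatchQueue.main.async { [weak self] in
            self?.label?.text = text
        }
    }
}
```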

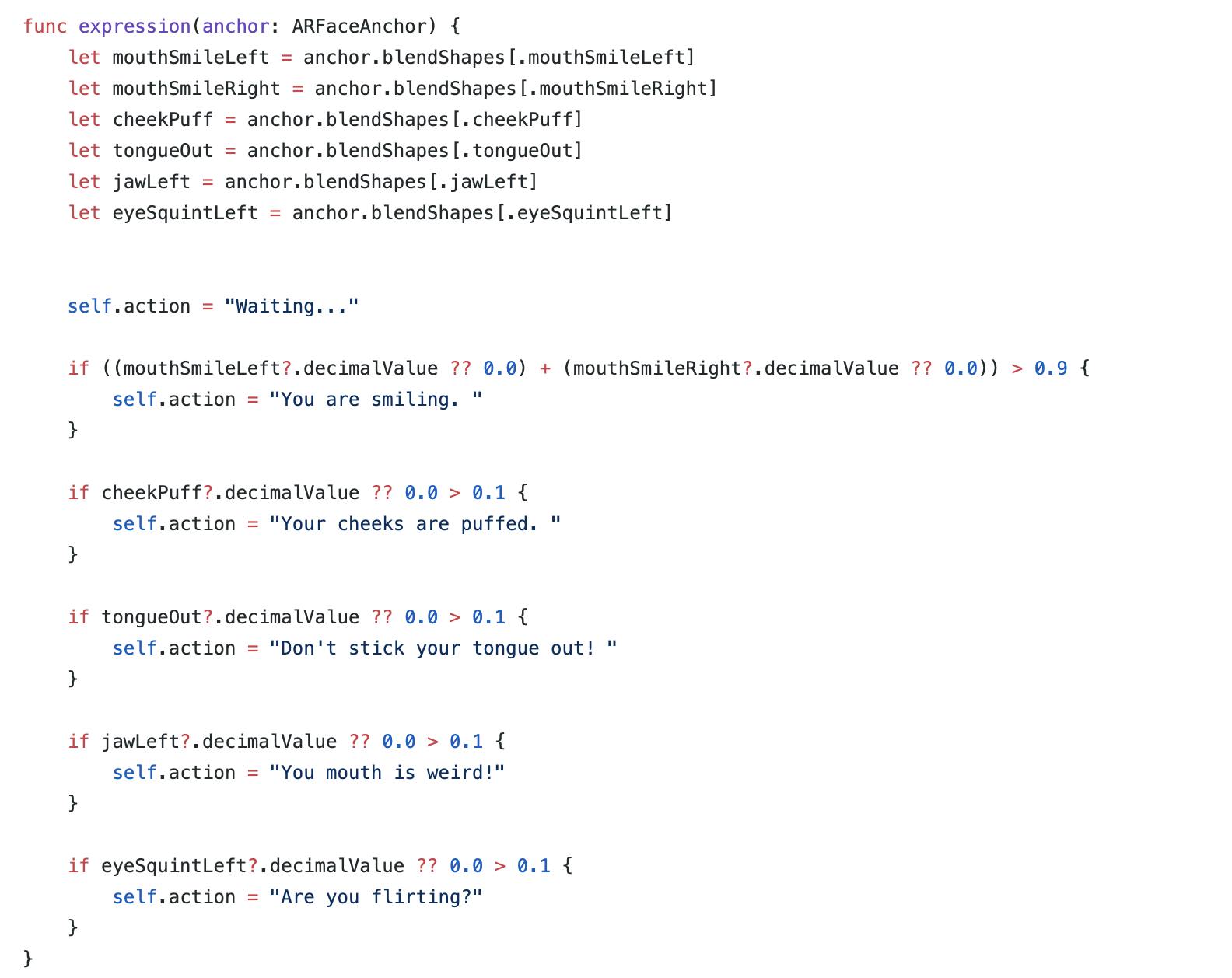

Step 7: Analyze your expression with ARFaceAnchor -

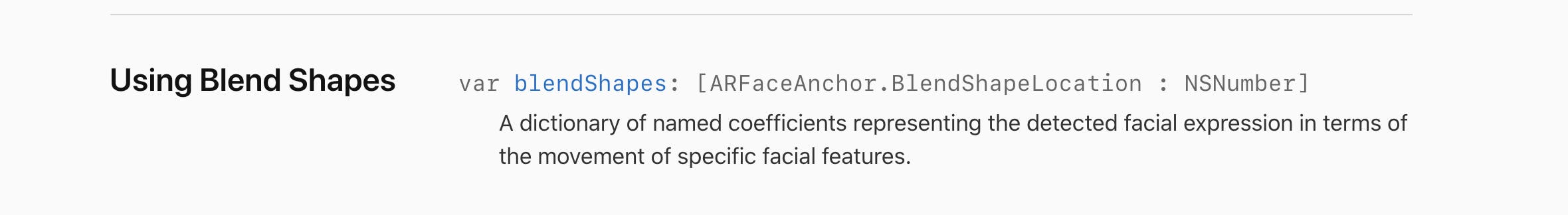

Inside renderer(_:didUpdate:for:), we call expression(anchor:). It reads the face anchor's blend shapes, which are coefficients from 0.0 to 1.0 describing how far specific facial features have moved, to measure facial movement.
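The exact checks inside expression(anchor:) appear only in the screenshot. The following is therefore a sketch under stated assumptions, not the original code. It splits the logic into a pure function, which you can test without a device, and a thin ARKit adapter. `ExpressionScores`, `describeExpression`, and every threshold are hypothetical, so tune the thresholds on a real device. Note that `.tongueOut` requires iOS 12 or later.

```swift
#if canImport(ARKit)
import ARKit
#endif

// Hypothetical value type holding the blend-shape coefficients we care about (each 0.0...1.0).
struct ExpressionScores {
    var smileLeft: Float = 0
    var smileRight: Float = 0
    var cheekPuff: Float = 0
    var tongueOut: Float = 0
    var jawOpen: Float = 0
}

// Turns coefficients into a short description for the label (thresholds are guesses).
func describeExpression(_ s: ExpressionScores) -> String {
    var parts: [String] = []
    if (s.smileLeft + s.smileRight) / 2 > 0.5 { parts.append("Smiling") }
    if s.cheekPuff > 0.3 { parts.append("Cheeks puffed") }
    if s.tongueOut > 0.3 { parts.append("Tongue out") }
    if s.jawOpen > 0.5 { parts.append("Mouth open") }
    return parts.isEmpty ? "Neutral" : parts.joined(separator: ", ")
}

#if canImport(ARKit)
extension ExpressionScores {
    // Reads the coefficients from a live ARFaceAnchor; missing keys default to 0.
    init(anchor: ARFaceAnchor) {
        let shapes = anchor.blendShapes
        self.init(smileLeft: shapes[.mouthSmileLeft]?.floatValue ?? 0,
                  smileRight: shapes[.mouthSmileRight]?.floatValue ?? 0,
                  cheekPuff: shapes[.cheekPuff]?.floatValue ?? 0,
                  tongueOut: shapes[.tongueOut]?.floatValue ?? 0,
                  jawOpen: shapes[.jawOpen]?.floatValue ?? 0)
    }
}
#endif
```

Inside renderer(_:didUpdate:for:), this could be used as `self.action = describeExpression(ExpressionScores(anchor: faceAnchor))`, followed by the main-thread label update. Keeping the thresholds in a plain function makes it easy to adjust them without running the camera.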

GitHub link from Prof. Vladimir Cezar

Thank you, Vladimir Cezar!!!